Enabling Multimodal User Interactions for Genomics Visualization Creation

Published in IEEE Visualization and Visual Analytics (VIS), 2023

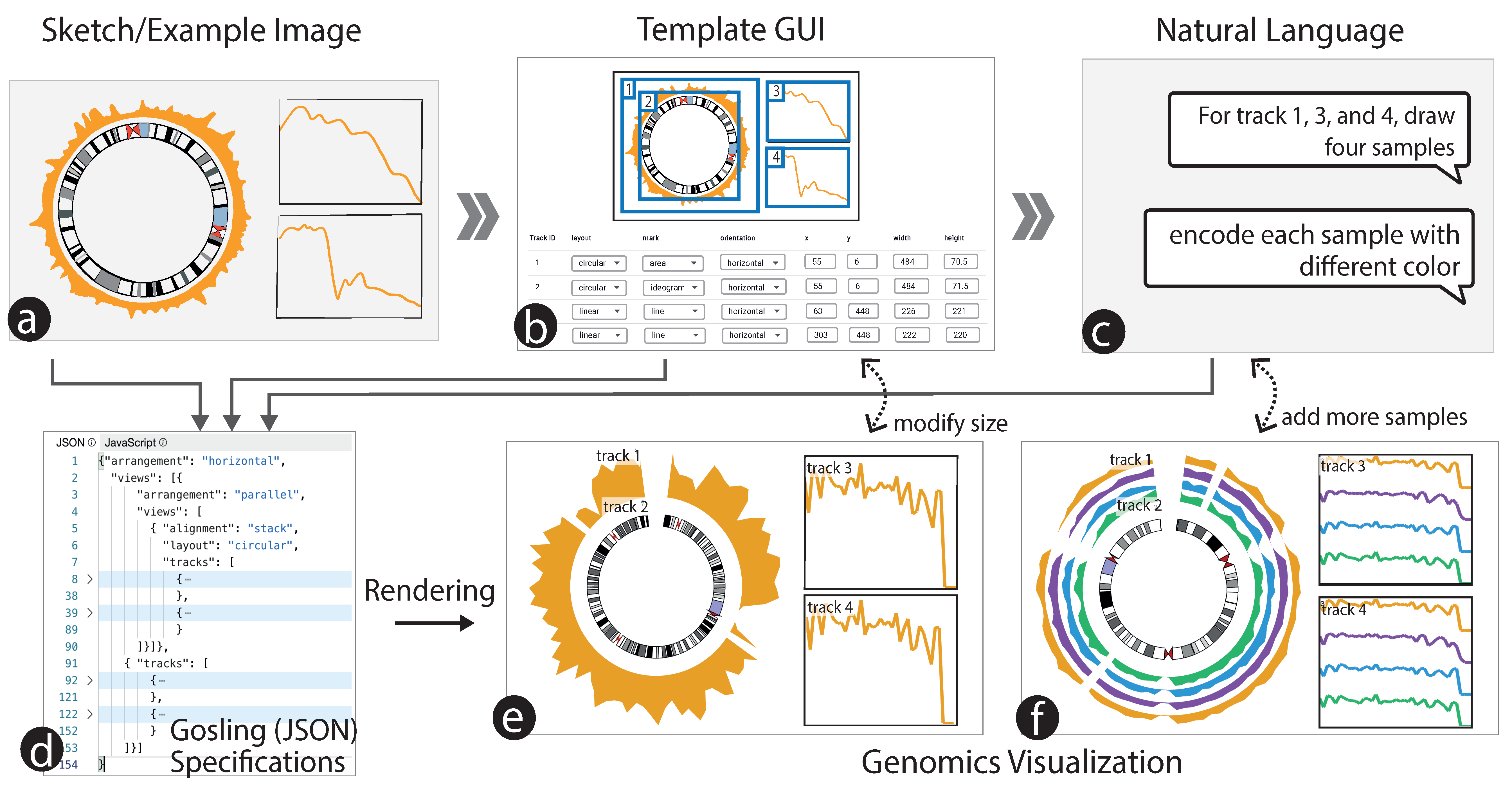

Visualization plays an important role in extracting insights from complex and large-scale genomics data. Traditional graphical user interfaces (GUIs) offer limited flexibility for custom visualizations. Our prior work, Gosling, enables expressive visualization creation using a grammar-based approach, but beginners may struggle to construct complex visualizations with it. To address this, we explore multimodal interactions, including sketches, example images, and natural language inputs, to streamline visualization creation. Specifically, we customize two deep learning models (YOLOv7 and GPT-3.5) to interpret user interactions and convert them into Gosling specifications. We propose a workflow that progressively introduces and integrates these multimodal interactions. We then present use cases demonstrating their effectiveness and identify challenges and opportunities for future research.

Recommended citation: Qianwen Wang∗, Xiao Liu∗, Man Qing Liang∗, Sehi L’Yi, and Nils Gehlenborg (Oct. 2023). “Enabling Multimodal User Interactions for Genomics Visualization Creation”. 2023 IEEE Visualization and Visual Analytics (VIS). IEEE. doi: 10.1109/vis54172.2023.00031. https://doi.ieeecomputersociety.org/10.1109/VIS54172.2023.00031